US Market Sentiment

The "US Market Sentiment" indicator is designed to provide insights into the sentiment of the US market. It is based on the calculation of an oscillator using data from the High Yield Ratio. This indicator can be helpful in assessing the overall sentiment and potential market trends.

Key Features:

Trend Direction: The indicator helps identify the general trend direction of market sentiment. Positive values indicate a bullish sentiment, while negative values indicate a bearish sentiment. Traders and investors can use this information to understand the prevailing market sentiment.

Overbought and Oversold Levels: The indicator can highlight overbought and oversold conditions in the market. When the oscillator reaches high positive levels, it suggests excessive optimism and a potential downside correction. Conversely, high negative levels indicate excessive pessimism and the possibility of an upside rebound.

Divergence Analysis: The indicator can reveal divergences between the sentiment oscillator and price movements. Divergences occur when the price reaches new highs or lows, but the sentiment oscillator fails to confirm the move. This can signal a potential trend reversal or weakening of the current trend.

Confirmation of Trading Signals: The "US Market Sentiment" indicator can be used to confirm other trading signals or indicators. For instance, if a momentum indicator generates a bullish signal, a positive reversal in the sentiment oscillator can provide additional confirmation for the trade.

Usage and Interpretation:

Positive values of the "US Market Sentiment" indicate a bullish sentiment, suggesting potential buying opportunities.

Negative values suggest a bearish sentiment, indicating potential selling or shorting opportunities.

Extreme positive or negative values may signal overbought or oversold conditions, respectively, and could precede a market reversal.

Divergences between the sentiment oscillator and price trends may suggest a potential change in the current market direction.

Traders and investors can combine the "US Market Sentiment" indicator with other technical analysis tools to enhance their decision-making process and gain deeper insights into the US market sentiment.


Step RSI [Loxx]

Enhanced Moving Average Calculation with Stepped Moving Average and the Advantages over Regular RSI

Technical analysis plays a crucial role in understanding and predicting market trends. One popular indicator used by traders and analysts is the Relative Strength Index (RSI). However, an enhanced approach called Stepped Moving Average, in combination with the Slow RSI function, offers several advantages over regular RSI calculations.

Stepped Moving Average and Moving Averages:

The Stepped Moving Average function serves as a crucial component in the calculation of moving averages. Moving averages smooth out price data over a specific period to identify trends and potential trading signals. By employing the Stepped Moving Average function, traders can enhance the accuracy of moving averages and make more informed decisions.

Stepped Moving Average takes two parameters: the current RSI value and a size parameter. It computes the next step in the moving average calculation by determining the upper and lower bounds of the moving average range. It accomplishes this by adjusting the values of smax and smin based on the given RSI and size.

Furthermore, Stepped Moving Average introduces the concept of a trend variable. By comparing the previous trend value with the current RSI and the previous upper and lower bounds, it updates the trend accordingly. This feature enables traders to identify potential shifts in market sentiment and make timely adjustments to their trading strategies.
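The stepping logic described above can be sketched in Python. This is a minimal sketch that assumes the common step-MA formulation: the input has bounds of `x ± size`, and those bounds ratchet in the direction of the trend. It is not Loxx's exact code. The name `stepped_ma` and the rule of passing the input through unchanged until a first trend is established are illustrative assumptions.

```python
from typing import List, Optional, Sequence


def stepped_ma(values: Sequence[float], size: float) -> List[float]:
    """Step a series (e.g. RSI) so it only moves once it breaks a +/- size band."""
    out: List[float] = []
    smax_prev: Optional[float] = None
    smin_prev: Optional[float] = None
    trend = 0  # 0 = undecided, 1 = up, -1 = down (assumed starting state)
    for x in values:
        smax = x + size  # provisional upper bound
        smin = x - size  # provisional lower bound
        if smax_prev is not None and smin_prev is not None:
            # The trend flips only when the input breaks the previous bounds.
            if x > smax_prev:
                trend = 1
            elif x < smin_prev:
                trend = -1
            # Ratchet: bounds never move against the current trend.
            if trend > 0 and smin < smin_prev:
                smin = smin_prev
            if trend < 0 and smax > smax_prev:
                smax = smax_prev
        if trend > 0:
            out.append(smin + size)
        elif trend < 0:
            out.append(smax - size)
        else:
            out.append(x)  # no trend yet: pass the input through
        smax_prev, smin_prev = smax, smin
    return out
```

For example, `stepped_ma([50, 60, 61, 59, 40], 5)` holds at 61 when the input dips to 59, which stays within the band. It only steps down once 40 breaks the lower bound.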

Advantages over Regular RSI:

Enhanced Range Boundaries:

The inclusion of size parameters in Stepped Moving Average allows for more precise determination of the upper and lower bounds of the moving average range. This feature provides traders with a clearer understanding of the potential price levels that can influence market behavior. Consequently, it aids in setting more effective entry and exit points for trades.

Improved Trend Identification:

The trend variable in Stepped Moving Average helps traders identify changes in market trends more accurately. By considering the previous trend value and comparing it to the current RSI and previous bounds, Stepped Moving Average captures trend reversals with greater precision. This capability empowers traders to respond swiftly to market shifts and potentially capture more profitable trading opportunities.

Smoother Moving Averages:

Stepped Moving Average's ability to adjust the moving average range bounds based on trend changes and size parameters results in smoother moving averages. Regular RSI calculations may produce jagged or erratic results due to abrupt market movements. Stepped Moving Average mitigates this issue by dynamically adapting the range boundaries, thereby providing traders with more reliable and consistent moving average signals.

Complementary Functionality with Slow RSI:

Stepped Moving Average and Slow RSI function in harmony to provide a comprehensive trading analysis toolkit. While Stepped Moving Average refines the moving average calculation process, Slow RSI offers a more accurate representation of market strength. The combination of these two functions facilitates a deeper understanding of market dynamics and assists traders in making better-informed decisions.

Extras

- Alerts

- Signals

Volatility Speedometer

The Volatility Speedometer indicator provides a visual representation of the rate of change of volatility in the market. It helps traders identify periods of high or low volatility and potential trading opportunities. The indicator consists of a histogram that depicts the volatility speed and an average line that smoothes out the volatility changes.

The histogram displayed by the Volatility Speedometer represents the rate of change of volatility. Positive values indicate an increase in volatility, while negative values indicate a decrease. The height of the histogram bars represents the magnitude of the volatility change. A higher histogram bar suggests a more significant change in volatility.

Additionally, the Volatility Speedometer includes a customizable average line that smoothes out the volatility changes over the specified lookback period. This average line helps traders identify the overall trend of volatility and its direction.

To enhance the interpretation of the Volatility Speedometer, color zones are used to indicate different levels of volatility speed. These color zones are based on predefined threshold levels. For example, green may represent high volatility speed, yellow for moderate speed, and fuchsia for low speed. Traders can customize these threshold levels based on their preference and trading strategy.
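Below is a minimal Python sketch of the histogram, the average line, and the color zones. It assumes ATR (a simple average of the true range) as the volatility measure and a percentage rate of change over the lookback. The function names, the use of the absolute speed to pick a zone, and the threshold values are hypothetical. They are not the indicator's published inputs.

```python
from typing import List, Optional, Sequence


def atr(high: Sequence[float], low: Sequence[float], close: Sequence[float], n: int) -> List[Optional[float]]:
    """Simple-average ATR: mean of the last n true ranges (None until n bars exist)."""
    tr: List[float] = []
    for i in range(len(close)):
        if i == 0:
            tr.append(high[0] - low[0])
        else:
            prev = close[i - 1]
            tr.append(max(high[i] - low[i], abs(high[i] - prev), abs(low[i] - prev)))
    return [sum(tr[i - n + 1:i + 1]) / n if i >= n - 1 else None for i in range(len(tr))]


def volatility_speed(vol: Sequence[Optional[float]], lookback: int) -> List[Optional[float]]:
    """Histogram: percent rate of change of the volatility series over `lookback` bars."""
    out: List[Optional[float]] = []
    for i, v in enumerate(vol):
        past = vol[i - lookback] if i >= lookback else None
        if v is None or past is None or past == 0:
            out.append(None)
        else:
            out.append(100.0 * (v - past) / past)
    return out


def average_line(speed: Sequence[Optional[float]], n: int) -> List[Optional[float]]:
    """Simple moving average of the histogram; None while the window is incomplete."""
    out: List[Optional[float]] = []
    for i in range(len(speed)):
        window = list(speed[i - n + 1:i + 1]) if i >= n - 1 else []
        if len(window) == n and all(v is not None for v in window):
            out.append(sum(window) / n)
        else:
            out.append(None)
    return out


def zone(speed: float, high_th: float, low_th: float) -> str:
    """Color zone from the magnitude of the speed (thresholds are hypothetical inputs)."""
    magnitude = abs(speed)
    if magnitude >= high_th:
        return "green"    # high volatility speed
    if magnitude >= low_th:
        return "yellow"   # moderate volatility speed
    return "fuchsia"      # low volatility speed
```

Raising `high_th` reserves the green zone for larger swings in volatility. This mirrors the threshold trade-off described in the inputs discussion.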

By monitoring the Volatility Speedometer, traders can gain insights into changes in market volatility and adjust their trading strategies accordingly. For example, during periods of high volatility speed, traders may consider employing strategies that capitalize on price swings, while during low volatility speed, they may opt for strategies that focus on range-bound price action.

Adjusting the inputs of the Volatility Speedometer indicator can provide valuable insights and flexibility to traders. By modifying the inputs, traders can customize the indicator to suit their specific trading style and preferences.

One input that can be adjusted is the "Lookback Period." This parameter determines the number of periods considered when calculating the rate of change of volatility. Increasing the lookback period can provide a broader perspective of volatility changes over a longer time frame. This can be beneficial for swing traders or those focusing on longer-term trends. On the other hand, reducing the lookback period can provide more responsiveness to recent volatility changes, making it suitable for day traders or those looking for short-term opportunities.

Another adjustable input is the "Volatility Measure." In the provided code, the Average True Range (ATR) is used as the volatility measure. However, traders can choose other volatility indicators such as Bollinger Bands, Standard Deviation, or custom volatility measures. By experimenting with different volatility measures, traders can gain a deeper understanding of market dynamics and select the indicator that best aligns with their trading strategy.

Additionally, the "Thresholds" inputs allow traders to define specific levels of volatility speed that are considered significant. Modifying these thresholds enables traders to adapt the indicator to different market conditions and their risk tolerance. For instance, increasing the thresholds may highlight periods of extreme volatility and help identify potential breakout opportunities, while lowering the thresholds may focus on more moderate volatility shifts suitable for range trading or trend-following strategies.

Remember, it is essential to combine the Volatility Speedometer with other technical analysis tools and indicators to make informed trading decisions.

ICT Commitment of Traders° by toodegrees

Description:

The Commitment of Traders (COT) is a valuable raw data report released weekly by the Commodity Futures Trading Commission (CFTC). This report offers insights into the current long and short positions of three key market entities:

Commercial Traders (usually represented in red)

Large Traders (typically depicted in green)

Small Speculator Traders (commonly shown in blue)

The concept of utilizing the COT data as a strategic trading tool was first introduced by Larry Williams, who emphasized the importance of monitoring Commercial Speculators – large corporate producers or consumers of commodities.

The Inner Circle Trader (ICT) prompts us to delve deeper into this data. While we can easily determine their Net Position (also referred to as the Main Program) by subtracting Commercial Short Positions from the Commercial Long Positions, this calculation doesn't reveal their ongoing Hedge Program.

Merely following the Main Program won't provide a trading edge. Aligning with the Hedge Program can be an invaluable weapon in your trading arsenal.

The Commercial Speculators' Hedge Program can be unveiled by examining the highest and lowest reading of their Net Position over a chosen time period and setting a new "zero line" between these extremes. This process generates a novel "COT Graph" providing a detailed understanding of the Commercial Speculators' current market activity.
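The Hedge Program calculation can be sketched as follows. The sketch assumes that the new zero line is the midpoint between the highest and lowest Net Position in the look-back window. It also assumes that each look-back is counted in weekly reports, so 13 reports is roughly 3 months, and so on. The names are hypothetical, and the published script may scale or normalize the result differently.

```python
from typing import Dict, List, Sequence

# Approximate number of weekly COT reports per look-back (assumption).
WINDOWS: Dict[str, int] = {"3M": 13, "6M": 26, "12M": 52, "2Y": 104, "3Y": 156}


def net_position(longs: Sequence[float], shorts: Sequence[float]) -> List[float]:
    """Main Program: commercial longs minus commercial shorts for each report."""
    return [lng - sht for lng, sht in zip(longs, shorts)]


def hedge_program(net: Sequence[float], window: int) -> List[float]:
    """Re-center each Net Position on the midpoint of its rolling high/low."""
    out: List[float] = []
    for i in range(len(net)):
        chunk = net[max(0, i - window + 1):i + 1]
        zero_line = (max(chunk) + min(chunk)) / 2  # the new "zero line"
        out.append(net[i] - zero_line)
    return out
```

A positive value means the commercials are net longer than the middle of their recent range. For example, `hedge_program(net, WINDOWS["6M"])` would give the 6-month view.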

When the Hedge Program, Seasonality, and Open Interest are cross-referenced with Institutional Orderflow, a trader can construct a very clear medium-to-long-term market narrative.

Features:

Access COT Data for the Commercial Speculators via TradingView's reliable data source

Automate calculations and display the 3-month, 6-month, 12-month, 2-year, and 3-year Hedge Program

Define your own Custom Time Range for the Hedge Program

Display the Main Program and all Hedge Programs in an easy-to-understand table format

Additionally, by following the included instructions, you can augment your table with COT data from multiple markets. This extra information can help monitor correlated markets and develop a more robust market narrative.

Open Interest Profile [Fixed Range] - By Leviathan

This script generates an aggregated Open Interest profile for any user-selected range and provides several other features and tools, such as OI Delta Profile, Positive Delta Levels, OI Heatmap, Range Levels, OIWAP, POC and much more.

The indicator will help you find levels of interest based on where other market participants are opening and closing their positions. This provides a deeper insight into market activity and serves as a foundation for various trading strategies (trapped traders, supply and demand, support and resistance, liquidity gaps, imbalances, liquidation levels, etc.). Additionally, this indicator can be used in conjunction with other tools such as Volume Profile.

Open Interest (OI) is a key metric in derivatives markets that refers to the total number of unsettled or open contracts. A contract is a mutual agreement between two parties to buy or sell an underlying asset at a predetermined price. Each contract consists of a long side and a short side, with one party agreeing to buy (long) and the other agreeing to sell (short). The party holding the long position profits from an increase in the asset's price, while the one holding the short position profits from a price decline.

Every long position opened requires a corresponding short position by another market participant, and vice versa. Although the number of accounts or traders holding long and short contracts may be imbalanced, the net value of positions held on each side remains balanced at a 1:1 ratio. For instance, an Open Interest of 100 BTC implies that there are currently 100 BTC worth of longs and 100 BTC worth of shorts open in the market. There might be more traders on one side holding smaller positions and fewer on the other side holding larger positions, but the net value of positions on both sides is equal: 100 BTC in longs and 100 BTC in shorts (1:1).

Consider a scenario where a trader decides to open a long position for 1 BTC at a price of $30k. For this long order to be executed, a counterparty must take the opposite side of the contract by placing a short order for 1 BTC at the same price of $30k. When both long and short orders are matched and executed, the Open Interest increases by 1 BTC, indicating the introduction of this new contract to the market.

The meaning of fluctuations in Open Interest:

- OI Increase - signifies new positions entering the market (both longs and shorts).

- OI Decrease - indicates positions exiting the market (both longs and shorts).

- OI Flat - represents no change in open positions due to low activity or a large number of contract transfers (contracts changing hands instead of being closed).

Typically, we monitor Open Interest in the form of its running value, either on a chart or through OI Delta histograms that depict the net change in OI for each price bar. This indicator enhances Open Interest analysis by illustrating the distribution of changes in OI on the price axis rather than the time axis (akin to Volume Profiles). While Volume Profile displays the volume that occurred at a given price level, the Open Interest Profile offers insight into where traders were opening and closing their positions.
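To illustrate distributing OI changes along the price axis, here is a minimal Python sketch. It sums positive and negative OI deltas into fixed-size price rows. It assumes each bar's delta is assigned to the row containing that bar's close. The actual script may spread each delta across the bar's range, and `row_size` is a hypothetical parameter.

```python
import math
from collections import defaultdict
from typing import DefaultDict, Dict, List, Sequence, Tuple


def oi_delta_profile(
    prices: Sequence[float], oi: Sequence[float], row_size: float
) -> Dict[float, Tuple[float, float]]:
    """Map each price row to (sum of OI increases, sum of OI decreases)."""
    rows: DefaultDict[float, List[float]] = defaultdict(lambda: [0.0, 0.0])
    for i in range(1, len(oi)):
        delta = oi[i] - oi[i - 1]                        # OI Delta for this bar
        row = math.floor(prices[i] / row_size) * row_size  # lower edge of the price row
        if delta >= 0:
            rows[row][0] += delta   # positions opened at this price
        else:
            rows[row][1] += delta   # positions closed at this price
    return {row: (up, down) for row, (up, down) in sorted(rows.items())}
```

Rows with large positive sums mark prices where positions were being opened. Large negative sums mark prices where positions were being closed.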

How to use the indicator?

1. Add the script to your chart

2. A prompt will appear, asking you to select the “Start Time” (start of the range) and the “End Time” (end of the range) by clicking anywhere on your chart.

3. Within a few seconds, a profile will be generated. If you wish to alter the selected range, you can drag the "Start Time" and "End Time" markers accordingly.

4. Enjoy the script and feel free to explore all the settings.

To learn more about each input in indicator settings, please read the provided tooltips. These can be accessed by hovering over or clicking on the ( i ) symbol next to the input.

MTF Stationary Extreme Indicator

The Multiple Timeframe Stationary Extreme Indicator is designed to help traders identify extreme price movements across different timeframes. By analyzing extremes in price action, this indicator aims to provide valuable insights into potential overbought and oversold conditions, offering opportunities for trading decisions.

The indicator operates by calculating the difference between the latest high/low and the high/low a specified number of periods back. This difference is expressed as a percentage, allowing for easy comparison and interpretation. Positive values indicate an increase in the extreme, while negative values suggest a decrease.

One of the unique features of this indicator is its ability to incorporate multiple timeframes. Traders can choose a higher timeframe to analyze alongside the current timeframe, providing a broader perspective on market dynamics. This feature enables a comprehensive assessment of extreme price movements, considering both short-term and longer-term trends.
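Here is a minimal sketch of the extreme-difference calculation and a higher-timeframe aggregation. It assumes the percentage is measured as `(high - high[n]) / high[n] * 100`, and likewise for lows. It also assumes a higher timeframe is formed by grouping a fixed number of lower-timeframe bars. Both readings of the description and all function names are assumptions.

```python
from typing import List, Optional, Sequence, Tuple


def extreme_change(
    highs: Sequence[float], lows: Sequence[float], n: int
) -> List[Tuple[Optional[float], Optional[float]]]:
    """Percent change of each high/low versus the high/low n bars back."""
    out: List[Tuple[Optional[float], Optional[float]]] = []
    for i in range(len(highs)):
        if i < n:
            out.append((None, None))  # not enough history yet
        else:
            out.append((100.0 * (highs[i] - highs[i - n]) / highs[i - n],
                        100.0 * (lows[i] - lows[i - n]) / lows[i - n]))
    return out


def resample(
    highs: Sequence[float], lows: Sequence[float], factor: int
) -> Tuple[List[float], List[float]]:
    """Group every `factor` bars into one higher-timeframe bar (highest high, lowest low)."""
    hs: List[float] = []
    ls: List[float] = []
    for start in range(0, len(highs) - factor + 1, factor):
        hs.append(max(highs[start:start + factor]))
        ls.append(min(lows[start:start + factor]))
    return hs, ls
```

Running `extreme_change` on both the original and the resampled series gives the current-timeframe and higher-timeframe readings side by side.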

By observing extreme movements on different timeframes, traders can gain deeper insights into market conditions. This can help in identifying potential areas of confluence or divergence, supporting more informed trading decisions. For example, when extreme movements align across multiple timeframes, it may indicate a higher probability of a significant price reversal or continuation.

To use the Multiple Timeframe Stationary Extreme Indicator effectively, traders should consider a few key points:

- Choose the Timeframes: Select the appropriate timeframes based on your trading strategy and objectives. The current timeframe represents the focus of your analysis, while the higher timeframe provides a broader context. Ensure the chosen timeframes align with your trading style and the asset you are trading.

- Interpret Extreme Movements: Pay attention to extreme movements that breach certain levels. Values above zero indicate a rise in the extreme, potentially signaling overbought conditions. Conversely, values below zero suggest a decrease, potentially indicating oversold conditions. Use these extreme movements as potential entry or exit signals, in conjunction with other indicators or confirmation signals.

- Validate with Price Action: Confirm the extreme movements observed on the indicator with price action. Look for confluence between the indicator's extreme levels and key support or resistance levels, trendlines, or chart patterns. This can provide added confirmation and increase the reliability of the signals generated by the indicator.

- Consider Volatility Filters: The indicator can be enhanced by incorporating volatility filters. By adjusting the sensitivity of the extreme differences calculation based on market volatility, traders can adapt the indicator to different market conditions. Higher volatility may require a longer lookback period, while lower volatility may call for a shorter one. Experiment with volatility filters to fine-tune the indicator's performance.

- Combine with Other Analysis Techniques: The Multiple Timeframe Stationary Extreme Indicator is most effective when used as part of a comprehensive trading strategy. Combine it with other technical analysis tools, such as trend indicators, oscillators, or chart patterns, to form a well-rounded approach. Consider risk management techniques and money management principles to optimize your trading strategy.

---------------------------------------------------------------------------------------------------------------------------------------------------------------

Remember that trading indicators, including the Multiple Timeframe Stationary Extreme Indicator, should not be used in isolation. They serve as tools to assist in decision-making, but they require proper context, analysis, and confirmation. Always conduct thorough analysis and consider market conditions, news events, and other relevant factors before making trading decisions.

It's recommended to backtest the indicator on historical data to assess its performance and effectiveness for your trading approach. This will help you understand its strengths and limitations, allowing you to refine and optimize your usage of the indicator.

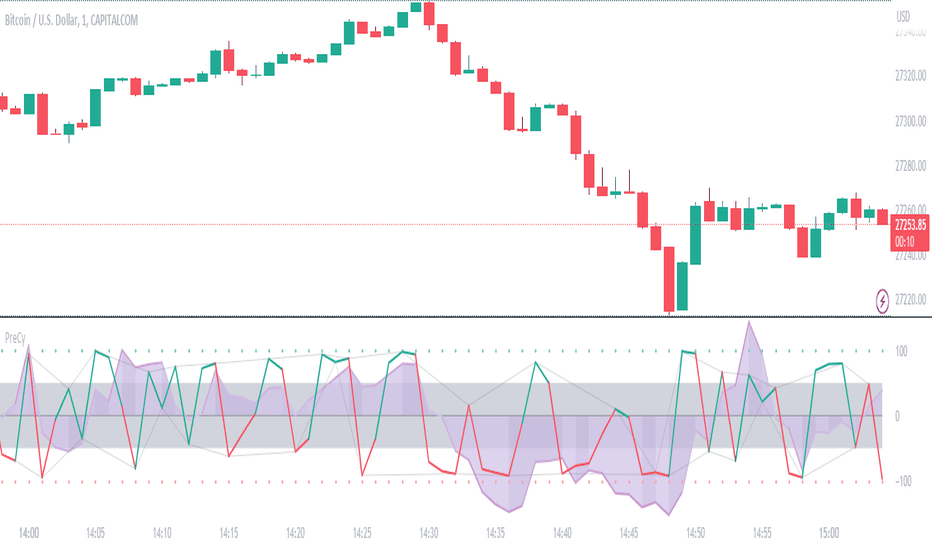

Buying/Selling Pressure Cycle (PreCy)

No-lag estimation of the buying/selling pressure for each candle.

----------------------------------------------------------------------------------------------------

WHY PreCy?

How much bearish pressure is there behind a group of bullish candles?

Is this bearish pressure increasing?

When might it overcome the bullish pressure?

Those were my questions when I started this indicator. They led me down the rabbit hole, where I discovered some secrets about the market. So I pushed deeper and developed it much further, in order to understand what is really happening "behind the scenes".

There are now 3 ways to read this indicator. It might look complicated at first, but the reward is to be able to anticipate and understand a lot more.

You can show/hide all the plots in the settings. So you can choose the way you prefer to use it.

----------------------------------------------------------------------------------------------------

FIRST WAY TO READ PreCy : The SIGNAL line

Go to the PreCy settings and, under "DISPLAY", uncheck "The pivot lines of the SIGNAL" and "The CYCLE areas". Make sure "The SIGNAL line" is checked.

The SIGNAL shows an estimation of the buying/selling pressure of each candle, going from 100 (100% bullish candle) to -100 (100% bearish candle). A doji would be shown close to zero.

Formula: Estimated % of buying pressure - Estimated % of selling pressure
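The source does not spell out the wick calculation it borrows, so the Python sketch below assumes one plausible split. Both wicks are shared equally between buyers and sellers, and the body is credited to the candle's direction. This is not necessarily RafaelZioni's exact code. Under that assumption the formula reduces to `100 * (close - open) / (high - low)`. That matches the stated behavior: +100 for a full bullish candle, -100 for a full bearish one, and about 0 for a doji.

```python
def pressure_signal(o: float, h: float, l: float, c: float) -> float:
    """Estimated % buying pressure minus estimated % selling pressure for one candle."""
    rng = h - l
    if rng <= 0:
        return 0.0                      # flat bar: no measurable pressure
    body = abs(c - o)
    top_wick = h - max(o, c)
    bottom_wick = min(o, c) - l
    wicks = top_wick + bottom_wick
    # Assumed split: wicks count half for each side, the body goes to the candle's direction.
    if c >= o:
        buy = (wicks + 2 * body) / (2 * rng)
    else:
        buy = wicks / (2 * rng)
    sell = 1.0 - buy
    return 100.0 * (buy - sell)
```

For instance, a bullish candle whose body fills one third of its range scores about +33.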

It is a very choppy line in general, but its colors help make sense of it.

When this choppiness alternates between the extremes, then there is not much pressure on each candle, and it's very unpredictable.

When the pressure increases, the SIGNAL's amplitude changes. It "compresses", meaning there is some interest in the market. It can compress by alternating above and below zero, or it can stay above zero (bullish), or below zero (bearish) for a while.

When the SIGNAL becomes linear (as opposed to choppy), there is a lot of pressure, and it is directional. The participants agree on a move in a chosen direction.

The trajectory of the SIGNAL can help anticipate when a move is going to happen (directional increase of pressure), or stop (returning to zero) and possibly reverse (crossing zero).

Advanced uses:

The SIGNAL can make more sense on a specific timeframe that is aligned with the frequency of the orders at that moment. So it is a good idea to switch between timeframes until it becomes less choppy and more directional.

It is interesting to follow any regular progression of the SIGNAL, as it can reveal the market makers' intention to move discreetly in a certain direction. There can be almost no volume and no move in the price action, yet the SIGNAL becomes linear and moves away from one extreme, slowly crosses the zero line, and pushes to the other extreme just as the amplitude of the price action increases drastically.

----------------------------------------------------------------------------------------------------

SECOND WAY TO READ PreCy : The PIVOTS of the SIGNAL line

Go to the PreCy settings and, under "DISPLAY", uncheck "The CYCLE areas". Make sure "The SIGNAL line" and "The pivot lines of the SIGNAL" are checked.

The PIVOTS help make sense of the apparent chaos of the SIGNAL. They can reveal the overall direction of the choppy moves.

This is especially true when the two PIVOT lines are parallel and slope in the same direction.
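Here is a sketch of how pivot lines on the SIGNAL might be computed. It assumes that a pivot high (low) is a value strictly above (below) its `left` and `right` neighbors, and that each line connects the two most recent pivots. The `left`/`right` settings and the function names are hypothetical, not PreCy's actual inputs.

```python
from typing import List, Optional, Sequence


def pivots(series: Sequence[float], left: int, right: int, kind: str = "high") -> List[int]:
    """Indices where the value beats all `left` earlier and `right` later neighbors."""
    found: List[int] = []
    for i in range(left, len(series) - right):
        neighbors = list(series[i - left:i]) + list(series[i + 1:i + right + 1])
        v = series[i]
        if kind == "high" and all(v > w for w in neighbors):
            found.append(i)
        elif kind == "low" and all(v < w for w in neighbors):
            found.append(i)
    return found


def pivot_line_slope(series: Sequence[float], idx: Sequence[int]) -> Optional[float]:
    """Slope of the line through the two most recent pivots (None if fewer than two)."""
    if len(idx) < 2:
        return None
    a, b = idx[-2], idx[-1]
    return (series[b] - series[a]) / (b - a)
```

If the high-pivot slope and the low-pivot slope share a sign and are of similar size, the two lines are roughly parallel and oriented. That is the case highlighted above.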

----------------------------------------------------------------------------------------------------

THIRD WAY TO READ PreCy : The CYCLE

Go to the PreCy settings and, under "DISPLAY", uncheck "The SIGNAL line" and "The pivot lines of the SIGNAL". Make sure "The CYCLE areas" is checked.

The CYCLE is a Moving Average of the SIGNAL in relation to each candle's size.

Formula: 6 periods Moving Average of the SIGNAL * (body of the current candle / 200 periods Moving Average of the candle's bodies)

The result goes from 200 to -200.
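The CYCLE formula can be translated into a Python sketch. It assumes simple moving averages and uses the absolute body size (`|close - open|`). The sketch applies no clamping, so unusually large candles can push it beyond the ±200 range quoted above. The window lengths are exposed as parameters only to make the sketch easy to test.

```python
from typing import List, Optional, Sequence


def cycle(
    signal: Sequence[float],
    opens: Sequence[float],
    closes: Sequence[float],
    ma_len: int = 6,
    body_len: int = 200,
) -> List[Optional[float]]:
    """MA(signal, ma_len) * (current body / MA(bodies, body_len)); None during warm-up."""
    bodies = [abs(c - o) for o, c in zip(opens, closes)]
    out: List[Optional[float]] = []
    for i in range(len(signal)):
        if i < max(ma_len, body_len) - 1:
            out.append(None)  # not enough bars for both averages
            continue
        avg_body = sum(bodies[i - body_len + 1:i + 1]) / body_len
        if avg_body == 0:
            out.append(0.0)   # avoid division by zero on flat history
            continue
        ma_signal = sum(signal[i - ma_len + 1:i + 1]) / ma_len
        out.append(ma_signal * bodies[i] / avg_body)
    return out
```

A candle with twice the average body size doubles the smoothed SIGNAL. This is how the CYCLE weights pressure by candle size.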

The CYCLE shows longer term indications of the pressures of the market.

Analysing the trajectory of the CYCLE can help predict the direction of the price.

When the CYCLE goes above or below the gray low intensity zone, it signals some interest in the move.

When the CYCLE stays above 100 or below -100, it is a sign of strength in the move.

When it has stayed outside the gray low intensity zone and then returns inside it, that is a strong signal of a probable change in behavior.

----------------------------------------------------------------------------------------------------

ALERTS

In the settings, you can pick the alerts you're interested in.

To activate them, right-click on the chart (or press Alt+A), choose "Add alert on Buying/Selling Pressure Cycle (PreCy)", then "Any alert()", then "Create".

Feel free to activate them on different timeframes. The alerts show which timeframe they are from (ex: "TF:15" for the 15 minutes TF).

I have added many more conditions to my own PreCy, taken from FREMA Trend, for example. You can do the same with your favorite scripts to make PreCy more accurate for your style.

----------------------------------------------------------------------------------------------------

Borrowed scripts:

To estimate the buying and selling pressures, PreCy uses the wicks calculations of "Volume net histogram" by RafaelZioni

To filter the alerts, PreCy uses the calculations of "Amplitude" by Koholintian.

----------------------------------------------------------------------------------------------------

DO NOT BASE YOUR TRADING DECISIONS ON 1 SINGLE INDICATOR'S SIGNALS.

Always confirm your ideas by other means, like price action and indicators of a different nature.

Variance Windows

Just a quick trial at using statistical variance/standard deviation as an indicator. The general idea is that higher variance in the short term tends to indicate more volatility/movement. The other thing is that it can help set probabilistic boundaries for movements (e.g., if you set the bands to 2 standard deviations, you are visualizing a range that covers roughly 95% of outcomes under a Gaussian assumption).

I haven't really tried forming any sort of strategies around this indicator, but there are a few potential possibilities for its usability.

Generally speaking, the magnitude of the standard deviation (relative to the price) is small when the market is consolidating. It is larger when the market is trending up or down.

If the long-term variance and the short-term variance are close to each other in scale, the trend is strong. Otherwise, the trend is weak. Note that I am only saying that the "trend" is strong, not that it is necessarily positive. This could be an uptrend, a downtrend, or a sideways trend.

When the magnitudes of the variances are changing from very similar to very different (usually it's the long-term variance getting much larger than the short-term one), that's an indication that the previous trend is coming to an end.

Typically, it's the long-term variance that is bigger than the short-term. However, when you see them cross where the short-term is bigger or even much bigger than the long-term, it's indicative of a spike event (more often than not, one that is not favorable if you are holding any position on a given security).

Because you have probabilistic windows based on some n standard deviations from the midline (which in this version, I've used a ZLEMA as that midline), those boundaries could possibly be used to set stop-loss limits and the like.

There's nothing too complicated or deep about this particular indicator. All I'm really doing is assuming that we are dealing with a Gaussian random process. I am actually using an EMA for the mean, even though a proper Gaussian variance calculation would use an SMA. When I used an SMA, though, it felt much more sensitive to noise, which made it less usable. In any case, it's just a simple first attempt after many years of not even looking at Pine Script. I'm open to a litany of criticisms, as I'm sure many of them will be rightly deserved. Otherwise, happy scalping to thee.
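Here is a rough Python sketch of the approach described above. It uses an EMA for the mean, an EMA of squared deviations for the variance, and a ZLEMA midline with bands at `k` standard deviations. The exact smoothing and seeding used in the script are assumptions; this version seeds each EMA with the first value. The short/long variance ratio helper is also an illustrative addition, not the author's code.

```python
import math
from typing import List, Optional, Sequence, Tuple


def ema(values: Sequence[float], n: int) -> List[float]:
    """Exponential moving average with alpha = 2 / (n + 1), seeded with the first value."""
    alpha = 2.0 / (n + 1)
    out: List[float] = []
    for i, v in enumerate(values):
        out.append(v if i == 0 else alpha * v + (1 - alpha) * out[-1])
    return out


def ema_variance(values: Sequence[float], n: int) -> List[float]:
    """Variance around an EMA mean (EMA of squared deviations)."""
    mean = ema(values, n)
    return ema([(v - m) ** 2 for v, m in zip(values, mean)], n)


def zlema(values: Sequence[float], n: int) -> List[float]:
    """Zero-lag EMA: EMA of the series plus its change over (n - 1) // 2 bars."""
    lag = (n - 1) // 2
    adjusted = [v + (v - values[i - lag]) if i >= lag else v for i, v in enumerate(values)]
    return ema(adjusted, n)


def bands(values: Sequence[float], n: int, k: float) -> List[Tuple[float, float, float]]:
    """(lower, mid, upper) with a ZLEMA midline and k standard deviations either side."""
    mid = zlema(values, n)
    sd = [math.sqrt(v) for v in ema_variance(values, n)]
    return [(m - k * s, m, m + k * s) for m, s in zip(mid, sd)]


def variance_ratio(values: Sequence[float], short_n: int, long_n: int) -> List[Optional[float]]:
    """Short-term over long-term variance; values above 1 flag a possible spike event."""
    short_v = ema_variance(values, short_n)
    long_v = ema_variance(values, long_n)
    return [s / lv if lv > 0 else None for s, lv in zip(short_v, long_v)]
```

With `k = 2`, the outer bands correspond to the roughly 95% window mentioned at the start of this description.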

5m Candle Overlay

Description:

The 5m Candle Overlay indicator is a powerful technical analysis tool designed to overlay 5-minute candles onto your chart. This indicator enables detailed analysis of price action within the 5-minute time frame, providing valuable insights into short-term market movements.

How it Works:

The 5m Candle Overlay indicator calculates the OHLC (Open, High, Low, Close) values specifically for the 5-minute time frame. By utilizing the request.security function, it retrieves the OHLC values for each 5-minute candle. The indicator then determines the color for each candle based on a comparison between the close and open prices. Bullish candles are assigned a green color with 75% opacity, while bearish candles are assigned a red color with 75% opacity. Additionally, the indicator checks if the current bar index is a multiple of 5 to prevent overlapping and enhance visualization.
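The aggregation and coloring steps can be sketched in Python. The sketch assumes a 1-minute chart where five consecutive bars make one 5-minute candle; in Pine Script, `request.security` handles this alignment. The `Candle` type and helper names are illustrative. Incomplete trailing groups are dropped, and a close equal to the open is treated as bearish, which the description leaves unspecified.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Candle:
    open: float
    high: float
    low: float
    close: float


def aggregate(bars: Sequence[Candle], group: int = 5) -> List[Candle]:
    """Build one higher-timeframe candle from each block of `group` bars."""
    out: List[Candle] = []
    for start in range(0, len(bars) - group + 1, group):
        chunk = bars[start:start + group]
        out.append(Candle(
            open=chunk[0].open,                  # first open of the block
            high=max(b.high for b in chunk),     # highest high
            low=min(b.low for b in chunk),       # lowest low
            close=chunk[-1].close,               # last close
        ))
    return out


def candle_color(c: Candle) -> Tuple[str, float]:
    """(color name, opacity): green for close > open, otherwise red, both at 75%."""
    return ("green", 0.75) if c.close > c.open else ("red", 0.75)


def should_draw(bar_index: int, group: int = 5) -> bool:
    """Draw only when the bar index is a multiple of `group`, to avoid overlaps."""
    return bar_index % group == 0
```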

Usage:

To effectively utilize the 5m Candle Overlay indicator, follow these steps:

1. Apply the 5m Candle Overlay indicator to your chart by adding it from the available indicators.

2. Observe the overlay of 5-minute candles on your chart, providing a detailed representation of price movements within the 5-minute time frame.

3. Interpret the candles:

- Bullish candles (green by default) indicate that the close price is higher than the open price, suggesting potential buying pressure.

- Bearish candles (red by default) indicate that the close price is lower than the open price, suggesting potential selling pressure.

4. Note that the indicator draws its overlay candles only on every fifth bar to prevent overlapping, ensuring clarity and ease of interpretation.

5. Combine the analysis of the 5-minute candles with other technical analysis tools, such as support and resistance levels, trend lines, or indicators from different time frames, to gain deeper insights and identify potential trade setups.

6. Implement appropriate risk management strategies, including setting stop-loss orders and position sizing, to effectively manage your trades within the 5-minute time frame and protect your capital.

Trend Angle

Introduction:

In today's post, we'll dive deep into the source code of a unique trading tool, the Trend Angle Indicator. The script is an indicator that calculates the trend angle for a given financial instrument. This powerful tool can help traders identify the strength and direction of a trend, allowing them to make informed decisions.

Overview of the Trend Angle Indicator:

The Trend Angle Indicator calculates the trend angle based on the slope of the price movement over a specified period. It uses an Exponential Moving Average (EMA) to smooth the data and an Epanechnikov kernel function for additional smoothing. The indicator provides a visual representation of the trend angle, making it easy to interpret for traders of all skill levels.

Let's break down the key components of the script:

Inputs:

Length: The number of periods to calculate the trend angle (default: 8)

Scale: A scaling factor for the ATR (Average True Range) calculation (default: 2)

Smoothing: The smoothing parameter for the Epanechnikov kernel function (default: 2)

Smoothing Factor: The radius of the Epanechnikov kernel function (default: 1)

Functions:

ema(): Exponential Moving Average calculation

atan2(): Arctangent function

degrees(): Conversion of radians to degrees

epanechnikov_kernel(): Epanechnikov kernel function for additional smoothing

Calculations:

atr: The EMA of the True Range

slope: The slope of the price movement over the given length

angle_rad: The angle of the slope in radians

degrees: The smoothed angle in degrees

Plotting:

Trend Angle: The trend angle, plotted as a line on the chart

Horizontal lines: 0, 90, and -90 degrees as reference points

How the Trend Angle Indicator Works:

The Trend Angle Indicator begins by calculating the Exponential Moving Average (EMA) of the True Range (TR) for a given financial instrument. This produces a smoothed volatility estimate (the ATR) that is later used to normalize the slope.

Next, the indicator calculates the slope of the price movement over the specified length. This slope is then divided by the scaled ATR to normalize the trend angle based on the instrument's volatility. The angle is calculated using the atan2() function, which computes the arctangent of the slope.

The final step in the process is to smooth the trend angle using the Epanechnikov kernel function. This function provides additional smoothing to the trend angle, making it easier to interpret and reducing the impact of short-term price fluctuations.
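The smoothing step can be sketched as a causal kernel smoother with Epanechnikov weights, K(u) = 0.75 (1 − u²) for |u| ≤ 1 and 0 otherwise. The description does not say how the Smoothing and Smoothing Factor inputs map onto the kernel. The single `bandwidth` parameter below, measured in bars, is therefore a hypothetical stand-in for both.

```python
def epanechnikov(u):
    """Epanechnikov kernel: 0.75 * (1 - u^2) inside [-1, 1], zero outside."""
    return 0.75 * (1.0 - u * u) if abs(u) <= 1.0 else 0.0


def kernel_smooth(values, bandwidth):
    """Weight the bar k steps back by K(k / bandwidth), using only past bars.

    `bandwidth` (> 0, in bars) is a hypothetical parameter for illustration.
    """
    out = []
    for i in range(len(values)):
        num = den = 0.0
        for k in range(min(int(bandwidth), i) + 1):
            w = epanechnikov(k / bandwidth)
            num += w * values[i - k]
            den += w
        out.append(num / den)  # den > 0 because the k = 0 weight is 0.75
    return out
```

The most recent bar always receives the largest weight, and bars older than `bandwidth` are ignored. This is how the kernel damps short-term wiggles without reaching far into the past.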

Conclusion:

The Trend Angle Indicator is a powerful trading tool that allows traders to quickly and easily determine the strength and direction of a trend. By combining the Exponential Moving Average, ATR, and Epanechnikov kernel function, this indicator provides an accurate and easily interpretable representation of the trend angle. Whether you're an experienced trader or just starting, the Trend Angle Indicator can provide valuable insights into the market and help improve your trading decisions.

SuperTrend with Chebyshev Filter

Modified Super Trend with Chebyshev Filter

The Modified Super Trend is an innovative take on the classic Super Trend indicator. This advanced version incorporates a Chebyshev filter, which significantly enhances its capabilities by reducing false signals and improving overall signal quality. In this post, we'll dive deep into the Modified Super Trend, exploring its history, the benefits of the Chebyshev filter, and how it effectively addresses the challenges associated with smoothing, delay, and noise.

History of the Super Trend

The Super Trend indicator, developed by Olivier Seban, has been a popular tool among traders since its inception. It helps traders identify market trends and potential entry and exit points. The Super Trend uses average true range (ATR) and a multiplier to create a volatility-based trailing stop, providing traders with a dynamic tool that adapts to changing market conditions. However, the original Super Trend has its limitations, such as the tendency to produce false signals during periods of low volatility or sideways trading.

The Chebyshev Filter

The Chebyshev filter is a powerful mathematical tool that makes an excellent addition to the Super Trend indicator. It effectively addresses the issues of smoothing, delay, and noise associated with traditional moving averages. Chebyshev filters are named after Pafnuty Chebyshev, a renowned Russian mathematician who made significant contributions to the field of approximation theory.

The Chebyshev filter is intended to produce smoother, more responsive moving averages than conventional smoothers. Like any causal filter, however, it still introduces some lag. Its design minimizes the worst-case (maximum) deviation between the ideal and the actual frequency response over the band it targets. There are two types of Chebyshev filters: Type I and Type II. Type I Chebyshev filters are designed to have an equiripple response in the passband, while Type II Chebyshev filters have an equiripple response in the stopband. The Modified Super Trend allows users to choose between these two types based on their preferences.
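The equiripple idea can be checked numerically with the textbook magnitude response of an analog Type I filter: |H(ω)| = 1 / sqrt(1 + ε² T_n(ω/ω_c)²). Here T_n is the order-n Chebyshev polynomial and ε sets the ripple, so the passband ripple in decibels is 10·log10(1 + ε²). The sketch evaluates that classical formula only. It is not taken from the indicator's code.

```python
import math


def cheb_poly(n, x):
    """Chebyshev polynomial T_n(x) for x >= 0 (all that a frequency ratio needs)."""
    if x <= 1.0:
        return math.cos(n * math.acos(x))  # oscillates in [-1, 1] inside the passband
    return math.cosh(n * math.acosh(x))    # grows monotonically past the cutoff


def cheby1_gain(omega, cutoff, order, eps):
    """Magnitude response of an analog Chebyshev Type I low-pass prototype."""
    t = cheb_poly(order, omega / cutoff)
    return 1.0 / math.sqrt(1.0 + (eps * t) ** 2)


# Gain across the passband stays between 1/sqrt(1 + eps^2) and 1 (the ripple band).
eps = 0.5
print(min(cheby1_gain(i / 100.0, 1.0, 4, eps) for i in range(101)))
```

Inside the passband the gain bounces between the same two limits, which is what "equiripple" means. Past the cutoff it falls steadily.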

Overcoming the Challenges

The Modified Super Trend addresses several challenges associated with the original Super Trend:

Smoothing: The Chebyshev filter produces a smoother moving average while keeping the added lag relatively small. This feature is particularly beneficial during periods of low volatility or sideways trading, as it reduces the number of false signals.

Delay: The Chebyshev filter helps minimize the delay between price action and the generated signal, allowing traders to make timely decisions based on more accurate information.

Noise Reduction: The Chebyshev filter's ability to minimize the worst-case error between the ideal and actual frequency response reduces the impact of noise on the generated signals. This feature is especially useful when using the true range as an offset for the price, as it helps generate more reliable signals within a reasonable time frame.

A Replacement for the Original Super Trend

The Modified Super Trend with Chebyshev filter is an excellent replacement for the original Super Trend indicator. It offers significant improvements in terms of signal quality, responsiveness, and accuracy. By incorporating the Chebyshev filter, the Modified Super Trend effectively reduces the number of false signals during low volatility or sideways trading, making it a more reliable tool for identifying market trends and potential entry and exit points.

In-Depth Guide to the Modified Super Trend Settings

The Modified Super Trend with Chebyshev filter offers a wide range of settings that allow traders to fine-tune the indicator to suit their specific trading styles and objectives. In this section, we will discuss each setting in detail, explaining its purpose and how to use it effectively.

Source

The source setting determines the price data used for calculations. The default setting is hl2, which calculates the average of the high and low prices. You can choose other price data sources such as close, open, or ohlc4 (average of open, high, low, and close prices) based on your preference.

Up Color and Down Color

These settings control the color of the trend line when the market is in an uptrend (up_color) and a downtrend (down_color). You can customize these colors to your liking, making it easier to visually identify the current market trend.

Text Color

This setting controls the color of the text displayed on the chart when using labels to indicate trend changes. You can choose any color that contrasts well with your chart background for better readability.

Mean Length

The mean_length setting determines the length (number of bars) used for the Chebyshev moving average calculation. A shorter length will make the moving average more responsive to price changes, while a longer length will produce a smoother moving average. It is crucial to find the right balance between responsiveness and smoothness, as a too-short length may generate false signals, while a too-long length might produce lagging signals. The default value is 64, but you can experiment with different values to find the optimal setting for your trading strategy.

Mean Ripple

The mean_ripple setting influences the Chebyshev filter's ripple effect in the passband (Type I) or stopband (Type II). The ripple effect represents small oscillations in the frequency response, which can impact the moving average's smoothness. The default value is 0.01, but you can experiment with different values to find the best balance between smoothness and responsiveness.

Chebyshev Type: Type I or Type II

The style setting allows you to choose between Type I and Type II Chebyshev filters. Type I filters have an equiripple response in the passband, while Type II filters have an equiripple response in the stopband. Depending on your preference for smoothness and responsiveness, you can choose the type that best fits your trading style.

ATR Style

The atr_style setting determines the method used for calculating the Average True Range (ATR). By default (false), it uses the traditional high-low range. When set to true, it uses the absolute difference between the open and close prices. You can choose the method that works best for your trading strategy and the market you are trading.
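The two range options can be sketched as a small per-bar function. The name `bar_range` is hypothetical. According to the ATR Length and ATR Ripple settings, the indicator then smooths this series with a Chebyshev filter.

```python
def bar_range(high, low, open_, close, atr_style=False):
    """Per-bar range fed into the ATR smoother (hypothetical helper).

    atr_style=False: high - low (the default described above)
    atr_style=True:  |close - open| (the size of the candle body)
    """
    if atr_style:
        return [abs(c - o) for o, c in zip(open_, close)]
    return [h - l for h, l in zip(high, low)]
```

The body-based option ignores wicks, so it reacts less to spikes that reverse within the same bar.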

ATR Length

The atr_length setting controls the length (number of bars) used for calculating the ATR. Similar to the mean_length, a shorter length will make the ATR more responsive to price changes, while a longer length will produce a smoother ATR. The default value is 64, but you can experiment with different values to find the optimal setting for your trading strategy.

ATR Ripple

The atr_ripple setting, like the mean_ripple, influences the ripple effect of the Chebyshev filter used in the ATR calculation. The default value is 0.05, but you can experiment with different values to find the best balance between smoothness and responsiveness.

Multiplier

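The multiplier setting determines the factor by which the ATR is multiplied before being added to or subtracted from the Chebyshev moving average to form the trend line. A larger multiplier places the trend line farther from the price.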

Super Trend Logic and Signal Optimization

The Modified Super Trend with Chebyshev filter is designed to minimize false signals and provide a clear indication of market trends. It does so by using a combination of moving averages, Average True Range (ATR), and a multiplier. In this section, we will discuss the Super Trend's logic, its ability to prevent false signals, and the early warning crosses added to the indicator.

Super Trend Logic

The Super Trend's logic is based on a combination of the Chebyshev moving average and ATR. The Chebyshev moving average is a smooth moving average that effectively filters out market noise, while the ATR is a measure of market volatility.

The Super Trend is calculated by adding or subtracting a multiple of the ATR from the Chebyshev moving average. The multiplier is a user-defined value that determines the distance between the trend line and the price action. A larger multiplier results in a wider channel, reducing the likelihood of false signals but potentially missing out on valid trend changes.

Preventing False Signals

The Super Trend is designed to minimize false signals by maintaining its trend direction until a significant change in the market occurs. In a downtrend, the trend line will only decrease in value, and in an uptrend, it will only increase. This helps prevent false signals caused by temporary price fluctuations or market noise.

When the price crosses the trend line, the Super Trend does not immediately change its direction. Instead, it employs a safety logic to ensure that the trend change is genuine. The safety logic checks if the new trend line (calculated using the updated moving average and ATR) is more extreme than the previous one. If it is, the trend line is updated; otherwise, the previous trend line is maintained. This mechanism further reduces the likelihood of false signals by ensuring that the trend line only changes when there is a significant shift in the market.
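The band construction and the one-directional trend line can be sketched with the band-ratchet rules of the conventional SuperTrend. This is an assumption about how the safety logic works, not the author's code. `mean` and `atr` stand in for the already-smoothed Chebyshev series and are passed in precomputed.

```python
def super_trend(close, mean, atr, multiplier):
    """Return (trend_line, direction); direction is +1 for up, -1 for down.

    `mean` and `atr` are assumed to be precomputed series aligned with `close`.
    """
    lines, dirs = [], []
    upper_prev = lower_prev = 0.0
    direction = -1  # arbitrary start: the first bar sits on the upper band
    for i in range(len(close)):
        upper = mean[i] + multiplier * atr[i]
        lower = mean[i] - multiplier * atr[i]
        if i > 0:
            # Ratchet: the lower band may only rise and the upper band may only
            # fall, unless the previous close broke through that band (reset).
            if not (lower > lower_prev or close[i - 1] < lower_prev):
                lower = lower_prev
            if not (upper < upper_prev or close[i - 1] > upper_prev):
                upper = upper_prev
            # Flip direction only when price closes beyond the ratcheted band.
            if direction == -1 and close[i] > upper:
                direction = 1
            elif direction == 1 and close[i] < lower:
                direction = -1
        lines.append(lower if direction == 1 else upper)
        dirs.append(direction)
        upper_prev, lower_prev = upper, lower
    return lines, dirs
```

In an uptrend the plotted line is the lower band, which can only move up. In a downtrend it is the upper band, which can only move down. This matches the one-directional behavior described above.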

Early Warning Crosses

To provide traders with additional insight, the Modified Super Trend with Chebyshev filter includes early warning crosses. These crosses are plotted on the chart when the price crosses the trend line without the safety logic. Although these crosses do not necessarily indicate a trend change, they can serve as a valuable heads-up for traders to monitor the market closely and prepare for potential trend reversals.

In conclusion, the Modified Super Trend with Chebyshev filter offers a significant improvement over the original Super Trend indicator. By incorporating the Chebyshev filter, this modified version effectively addresses the challenges of smoothing, delay, and noise reduction while minimizing false signals. The wide range of customizable settings allows traders to tailor the indicator to their specific needs, while the inclusion of early warning crosses provides valuable insight into potential trend reversals.

Ultimately, the Modified Super Trend with Chebyshev filter is an excellent tool for traders looking to enhance their trend identification and decision-making abilities. With its advanced features, this indicator can help traders navigate volatile markets with confidence, making more informed decisions based on accurate, timely information.

Stochastic Chebyshev Smoothed With Zero Lag Smoothing

Fast and Smooth Stochastic Oscillator with Zero Lag

Introduction

In this post, we will discuss a custom implementation of a Stochastic Oscillator that smooths the signal without introducing noticeable lag. The goal is a fast Stochastic Oscillator that is less prone to false signals without being slow and sluggish.

We will go through the code step by step, explaining the various functions and the overall structure of the code.

First, let's start with a brief overview of the Stochastic Oscillator and the problem it addresses.

Background

The Stochastic Oscillator is a momentum indicator used in technical analysis to determine potential overbought or oversold conditions in an asset's price. It compares the closing price of an asset to its price range over a specified period. However, the Stochastic Oscillator is susceptible to false signals due to its sensitivity to price movements. This is where our custom implementation comes in, offering a smoother signal without noticeable lag, thus reducing the number of false signals.
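For reference, the raw %K line places the close within the high-low range of a lookback window: %K = 100 × (close − lowest low) / (highest high − lowest low). A minimal Python version follows. The default length of 14 is the common textbook choice, not necessarily this script's, and returning NaN for a flat window is an assumed convention.

```python
import math


def stochastic_k(high, low, close, length=14):
    """Raw %K: position of the close inside the last `length` bars' high-low range."""
    k = []
    for i in range(len(close)):
        start = max(0, i - length + 1)  # shorter window on the first bars
        highest = max(high[start:i + 1])
        lowest = min(low[start:i + 1])
        if highest == lowest:
            k.append(math.nan)  # undefined when the range is zero
        else:
            k.append(100.0 * (close[i] - lowest) / (highest - lowest))
    return k
```

Because %K reacts to every new high, low or close inside a short window, it moves sharply on small price changes. That sensitivity is the source of the false signals discussed next.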

Despite its popularity and widespread use in technical analysis, the Stochastic Oscillator has its share of drawbacks. While it is a price scaler that allows for easier comparisons across different assets and timeframes, it is also known for generating false signals, which can lead to poor trading decisions. In this section, we will delve deeper into the limitations of the Stochastic Oscillator and discuss the challenges associated with smoothing to mitigate its drawbacks.

Limitations of the Stochastic Oscillator

False Signals: The primary issue with the Stochastic Oscillator is its tendency to produce false signals. Since it is a momentum indicator, it reacts to short-term price movements, which can lead to frequent overbought and oversold signals that do not necessarily indicate a trend reversal. This can result in traders entering or exiting positions prematurely, incurring losses or missing out on potential gains.

Sensitivity to Market Noise: The Stochastic Oscillator is highly sensitive to market noise, which can create erratic signals in volatile markets. This sensitivity can make it difficult for traders to discern between genuine trend reversals and temporary fluctuations.

Lack of Predictive Power: Although the Stochastic Oscillator can help identify potential overbought and oversold conditions, it does not provide any information about the future direction or strength of a trend. As a result, it is often used in conjunction with other technical analysis tools to improve its predictive power.

Challenges of Smoothing the Stochastic Oscillator

To address the limitations of the Stochastic Oscillator, many traders attempt to smooth the indicator by applying various techniques. However, these approaches are not without their own set of challenges:

Trade-off between Smoothing and Responsiveness: The process of smoothing the Stochastic Oscillator inherently involves reducing its sensitivity to price movements. While this can help eliminate false signals, it can also result in a less responsive indicator, which may not react quickly enough to genuine trend reversals. This trade-off can make it challenging to find the optimal balance between smoothing and responsiveness.

Increased Complexity: Smoothing techniques often involve the use of additional mathematical functions and algorithms, which can increase the complexity of the indicator. This can make it more difficult for traders to understand and interpret the signals generated by the smoothed Stochastic Oscillator.

Lagging Signals: Some smoothing methods, such as moving averages, can introduce a time lag into the Stochastic Oscillator's signals. This can result in late entry or exit points, potentially reducing the profitability of a trading strategy based on the smoothed indicator.

Overfitting: In an attempt to eliminate false signals, traders may over-optimize their smoothing parameters, resulting in a Stochastic Oscillator that is overfitted to historical data. This can lead to poor performance in real-time trading, as the overfitted indicator may not accurately reflect the dynamics of the current market.

In our custom implementation of the Stochastic Oscillator, we used a combination of Chebyshev Type I Moving Average and zero-lag Gaussian-weighted moving average filters to address the indicator's limitations while preserving its responsiveness. In this section, we will discuss the reasons behind selecting these specific filters and the advantages of using the Chebyshev filter for our purpose.

Filter Selection

Chebyshev Type I Moving Average: The Chebyshev filter was chosen for its ability to provide a smoother signal without sacrificing much responsiveness. It is designed to minimize the maximum deviation of its frequency response from the ideal response within the passband, which reduces noise while preserving the overall shape of the signal. The Chebyshev Type I Moving Average achieves this by allowing a specified amount of ripple in the passband. In exchange, it gets a steeper roll-off and better noise rejection than other filters of the same order, such as the Butterworth filter.

Zero-lag Gaussian-weighted Moving Average: To further improve the Stochastic Oscillator's performance without introducing noticeable lag, we used the zero-lag Gaussian-weighted moving average (GWMA) filter. This filter combines the benefits of a Gaussian-weighted moving average, which prioritizes recent data points by assigning them higher weights, with a zero-lag approach that minimizes the time delay in the filtered signal. The result is a smoother signal that is less prone to false signals and is more responsive than traditional moving average filters.

Advantages of the Chebyshev Filter

Effective Noise Reduction: The primary advantage of the Chebyshev filter is its ability to effectively reduce noise in the Stochastic Oscillator signal. By minimizing the maximum error within a specified frequency range, the Chebyshev filter suppresses short-term fluctuations that can lead to false signals while preserving the overall trend.

Customizable Ripple Factor: The Chebyshev Type I Moving Average allows for a customizable ripple factor, enabling traders to fine-tune the filter's aggressiveness in reducing noise. This flexibility allows for better adaptability to different market conditions and trading styles.

Responsiveness: Despite its effective noise reduction, the Chebyshev filter remains relatively responsive compared to other smoothing filters. This responsiveness allows for more accurate detection of genuine trend reversals, making it a suitable choice for our custom Stochastic Oscillator implementation.

Compatibility with Zero-lag Techniques: The Chebyshev filter can be effectively combined with zero-lag techniques, such as the Gaussian-weighted moving average filter used in our custom implementation. This combination results in a Stochastic Oscillator that is both smooth and responsive, with minimal lag.

Code Overview

The code begins with defining custom mathematical functions for hyperbolic sine, cosine, and their inverse functions. These functions will be used later in the code for smoothing purposes.

Next, the gaussian_weight function is defined, which calculates the Gaussian weight for a given 'k' and 'smooth_per'. The zero_lag_gwma function calculates the zero-lag moving average with Gaussian weights. This function is used to create a Gaussian-weighted moving average with minimal lag.
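The exact weight formula and de-lagging method are not given here, so the sketch below makes two labeled assumptions:

- `gaussian_weight(k, smooth_per)` is exp(−k² / (2·smooth_per²)), where k is the bar's age.
- The zero-lag step uses the familiar 2·MA − MA(MA) correction, the same idea behind DEMA.

For a straight-line input, this correction removes the lag exactly once both averaging windows are full.

```python
import math


def gaussian_weight(k, smooth_per):
    """Assumed Gaussian weight: largest for the newest bar (k = 0), decaying with age."""
    return math.exp(-(k * k) / (2.0 * smooth_per * smooth_per))


def gwma(values, length, smooth_per):
    """Gaussian-weighted moving average over the last `length` bars."""
    out = []
    for i in range(len(values)):
        num = den = 0.0
        for k in range(min(length, i + 1)):  # shorter window on the first bars
            w = gaussian_weight(k, smooth_per)
            num += w * values[i - k]
            den += w
        out.append(num / den)
    return out


def zero_lag_gwma(values, length, smooth_per):
    """Assumed de-lagging: 2 * GWMA(x) - GWMA(GWMA(x))."""
    first = gwma(values, length, smooth_per)
    second = gwma(first, length, smooth_per)
    return [2.0 * a - b for a, b in zip(first, second)]
```

On a steady ramp the plain GWMA trails price by a fixed number of bars, while the corrected series lands on the current value. On choppy data the correction also amplifies some noise, which is the usual cost of de-lagging.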

The chebyshevI function is an implementation of the Chebyshev Type I Moving Average, which is used for smoothing the Stochastic Oscillator. This function takes the source value (src), length of the moving average (len), and the ripple factor (ripple) as input parameters.

The main part of the code starts by defining input parameters for K and D smoothing and ripple values. The Stochastic Oscillator is calculated using the ta.stoch function with Chebyshev smoothed inputs for close, high, and low. The result is further smoothed using the zero-lag Gaussian-weighted moving average function (zero_lag_gwma).

Finally, the lag variable is calculated using the Chebyshev Type I Moving Average for the Stochastic Oscillator. The Stochastic Oscillator and the lag variable are plotted on the chart, along with upper and lower bands at 80 and 20 levels, respectively. A fill is added between the upper and lower bands for better visualization.

Conclusion

The custom Stochastic Oscillator presented in this blog post combines the Chebyshev Type I Moving Average and zero-lag Gaussian-weighted moving average filters to provide a smooth and responsive signal without introducing noticeable lag. This innovative implementation results in a fast Stochastic Oscillator that is less prone to false signals, making it a valuable tool for technical analysts and traders alike.

However, it is crucial to recognize that the Stochastic Oscillator, despite being a price scaler, has its limitations, primarily due to its propensity for generating false signals. While smoothing techniques, like the ones used in our custom implementation, can help mitigate these issues, they often introduce new challenges, such as reduced responsiveness, increased complexity, lagging signals, and the risk of overfitting.

The selection of the Chebyshev Type I Moving Average and zero-lag Gaussian-weighted moving average filters was driven by their combined ability to provide a smooth and responsive signal while minimizing false signals. The advantages of the Chebyshev filter, such as effective noise reduction, customizable ripple factor, and responsiveness, make it an excellent fit for addressing the limitations of the Stochastic Oscillator.

When using the Stochastic Oscillator, traders should be aware of these limitations and challenges, and consider incorporating other technical analysis tools and techniques to supplement the indicator's signals. This can help improve the overall accuracy and effectiveness of their trading strategies, reducing the risk of losses due to false signals and other limitations associated with the Stochastic Oscillator.

Feel free to use, modify, or improve upon this custom Stochastic Oscillator code in your trading strategies. We hope this detailed walkthrough of the custom Stochastic Oscillator, its limitations, challenges, and filter selection has provided you with valuable insights and a better understanding of how it works. Happy trading!

Chebyshev type I and II Filter

Chebyshev Type I and II Filters: Smoothing Techniques for Technical Analysis

Introduction:

In technical analysis, smoothing techniques are used to remove noise from time series data. They help to identify trends and improve the readability of charts. One such powerful smoothing technique is the family of Chebyshev Type I and II filters. In this post, we will dive deep into the Chebyshev filters, discuss their significance, and explain the differences between Type I and Type II filters.

Chebyshev Filters:

Chebyshev filters are a class of infinite impulse response (IIR) filters that are widely used in signal processing applications. They are known for their ability to provide a sharper cutoff between the passband and the stopband compared to other filter types, such as Butterworth filters. The Chebyshev filters are named after the Russian mathematician Pafnuty Chebyshev, who created the Chebyshev polynomials that form the basis for these filters.

The two main types of Chebyshev filters are:

1. Chebyshev Type I filters: These filters have an equiripple passband, which means they have equal and constant ripple within the passband. The advantage of Type I filters is that they usually provide a faster roll-off rate between the passband and the stopband compared to other filter types. However, the trade-off is that they may have larger ripples in the passband, resulting in a less smooth output.

2. Chebyshev Type II filters: These filters have an equiripple stopband, which means they have equal and constant ripple within the stopband. The advantage of Type II filters is that their passband is monotonic (free of ripple), which gives a more controlled output. However, this comes at the cost of a slower roll-off rate between the passband and the stopband compared to Type I filters.
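For reference, the textbook squared magnitude responses of the two analog prototypes are given below. T_n is the order-n Chebyshev polynomial, ε is the ripple factor, ω_c is the passband edge and ω_s is the stopband edge. The description does not state how the scripts' ripple input maps to ε.

$$
|H_{\mathrm{I}}(j\omega)|^2 = \frac{1}{1 + \varepsilon^2 T_n^2(\omega/\omega_c)}, \qquad
|H_{\mathrm{II}}(j\omega)|^2 = \frac{1}{1 + \dfrac{1}{\varepsilon^2 T_n^2(\omega_s/\omega)}}
$$

$$
T_n(x) = \begin{cases} \cos(n \arccos x), & 0 \le x \le 1, \\ \cosh(n \operatorname{arccosh} x), & x > 1. \end{cases}
$$

In the Type I response, T_n oscillates between −1 and 1 for ω ≤ ω_c, so the gain ripples between 1 and 1/√(1 + ε²) across the passband. In the Type II response the same oscillation happens for ω ≥ ω_s. That is why its ripple sits in the stopband while its passband stays monotonic.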

Why Choose Chebyshev Filters for Smoothing?

Chebyshev filters are an excellent choice for smoothing in technical analysis due to their ability to provide a sharper transition between the passband and the stopband. This sharper transition helps in preserving the essential features of the underlying data while effectively removing noise. The two types of Chebyshev filters offer different trade-offs between the smoothness of the output and the roll-off rate, allowing users to choose the one that best suits their requirements.

Implementing Chebyshev Filters:

In the Pine Script language, we can implement the Chebyshev Type I and II filters using custom functions. We first define the custom hyperbolic functions cosh, acosh, sinh, and asinh, as well as the inverse tangent function atan. These functions are essential for calculating the filter coefficients.

Next, we create two separate functions for the Chebyshev Type I and II filters, named chebyshevI and chebyshevII, respectively. Each function takes three input parameters: the source data (src), the filter length (len), and the ripple value (ripple). The ripple value determines the amount of ripple in the passband for Type I filters and in the stopband for Type II filters. A higher ripple value results in a faster roll-off rate but may lead to a less smooth output.

Finally, we create a main function called chebyshev, which takes an additional boolean input parameter named style. If the style parameter is set to false, the function calculates the Chebyshev Type I filter using the chebyshevI function. If the style parameter is set to true, the function calculates the Chebyshev Type II filter using the chebyshevII function.
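The structure just described (two typed functions plus a `chebyshev` dispatcher with a boolean `style`) can be sketched in Python as below. The names mirror the functions above in snake_case. The coefficient formulas are an assumption: they reduce the Chebyshev design to a single recursive smoothing coefficient computed with cosh/acosh and sinh/asinh. The result is therefore a first-order (one-pole) smoother, not a full higher-order Chebyshev filter with a true equiripple response.

```python
import math


def _one_pole(src, g):
    """Recursive smoother y[t] = (1 - g) * x[t] + g * y[t-1], seeded with x[0]."""
    out = []
    for x in src:
        out.append(x if not out else (1.0 - g) * x + g * out[-1])
    return out


def _check(length, ripple):
    if length < 1 or not 0.0 < ripple < 1.0:
        raise ValueError("need length >= 1 and 0 < ripple < 1")


def chebyshev_i(src, length, ripple):
    """Type I flavored coefficient (assumed formula)."""
    _check(length, ripple)
    a = math.cosh(math.acosh(1.0 / (1.0 - ripple)) / length)
    b = math.sinh(math.asinh(1.0 / ripple) / length)
    return _one_pole(src, (a - b) / (a + b))


def chebyshev_ii(src, length, ripple):
    """Type II flavored coefficient (assumed formula)."""
    _check(length, ripple)
    a = math.cosh(math.acosh(1.0 / ripple) / length)
    b = math.sinh(math.asinh(ripple) / length)
    return _one_pole(src, (a - b) / (a + b))


def chebyshev(src, length, ripple, style=False):
    """style=False selects Type I; style=True selects Type II."""
    return chebyshev_ii(src, length, ripple) if style else chebyshev_i(src, length, ripple)
```

With `length=64`, the Type I coefficient for `ripple=0.01` is roughly 0.85, while the Type II coefficient for `ripple=0.05` is very close to 1. The two variants can therefore smooth very differently at the same length, so the ripple value needs tuning separately for each style.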

By adjusting the input parameters, users can choose the type of Chebyshev filter and configure its characteristics to suit their needs.

Conclusion:

The Chebyshev Type I and II filters are powerful smoothing techniques that can be used in technical analysis to remove noise from time series data. They offer a sharper transition between the passband and the stopband compared to other filter types, which helps in preserving the essential features of the data while effectively reducing noise. By implementing these filters in Pine Script, traders can easily integrate them into their trading strategies and improve the readability of their charts.

Endpointed SSA of Price [Loxx]

The Endpointed SSA of Price: A Comprehensive Tool for Market Analysis and Decision-Making

The financial markets present sophisticated challenges for traders and investors as they navigate the complexities of market behavior. To effectively interpret and capitalize on these complexities, it is crucial to employ powerful analytical tools that can reveal hidden patterns and trends. One such tool is the Endpointed SSA of Price, which combines the strengths of Caterpillar Singular Spectrum Analysis, a sophisticated time series decomposition method, with insights from the fields of economics, artificial intelligence, and machine learning.

The Endpointed SSA of Price has its roots in the interdisciplinary fusion of mathematical techniques, economic understanding, and advancements in artificial intelligence. This unique combination allows for a versatile and reliable tool that can aid traders and investors in making informed decisions based on comprehensive market analysis.

The Endpointed SSA of Price is not only valuable for experienced traders but also serves as a useful resource for those new to the financial markets. By providing a deeper understanding of market forces, this innovative indicator equips users with the knowledge and confidence to better assess risks and opportunities in their financial pursuits.

█ Exploring Caterpillar SSA: Applications in AI, Machine Learning, and Finance

Caterpillar SSA (Singular Spectrum Analysis) is a non-parametric method for time series analysis and signal processing. It is based on a combination of principles from classical time series analysis, multivariate statistics, and the theory of random processes. The method was initially developed in the early 1990s by a group of Russian mathematicians, including Golyandina, Nekrutkin, and Zhigljavsky.

Background Information:

SSA is an advanced technique for decomposing time series data into a sum of interpretable components, such as trend, seasonality, and noise. This decomposition allows for a better understanding of the underlying structure of the data and facilitates forecasting, smoothing, and anomaly detection. Caterpillar SSA is a particular implementation of SSA that has proven to be computationally efficient and effective for handling large datasets.

Uses in AI and Machine Learning:

In recent years, Caterpillar SSA has found applications in various fields of artificial intelligence (AI) and machine learning. Some of these applications include:

1. Feature extraction: Caterpillar SSA can be used to extract meaningful features from time series data, which can then serve as inputs for machine learning models. These features can help improve the performance of various models, such as regression, classification, and clustering algorithms.

2. Dimensionality reduction: Caterpillar SSA can be employed as a dimensionality reduction technique, similar to Principal Component Analysis (PCA). It helps identify the most significant components of a high-dimensional dataset, reducing the computational complexity and mitigating the "curse of dimensionality" in machine learning tasks.

3. Anomaly detection: The decomposition of a time series into interpretable components through Caterpillar SSA can help in identifying unusual patterns or outliers in the data. Machine learning models trained on these decomposed components can detect anomalies more effectively, as the noise component is separated from the signal.

4. Forecasting: Caterpillar SSA has been used in combination with machine learning techniques, such as neural networks, to improve forecasting accuracy. By decomposing a time series into its underlying components, machine learning models can better capture the trends and seasonality in the data, resulting in more accurate predictions.

Application in Financial Markets and Economics:

Caterpillar SSA has been employed in various domains within financial markets and economics. Some notable applications include:

1. Stock price analysis: Caterpillar SSA can be used to analyze and forecast stock prices by decomposing them into trend, seasonal, and noise components. This decomposition can help traders and investors better understand market dynamics, detect potential turning points, and make more informed decisions.

2. Economic indicators: Caterpillar SSA has been used to analyze and forecast economic indicators, such as GDP, inflation, and unemployment rates. By decomposing these time series, researchers can better understand the underlying factors driving economic fluctuations and develop more accurate forecasting models.

3. Portfolio optimization: By applying Caterpillar SSA to financial time series data, portfolio managers can better understand the relationships between different assets and make more informed decisions regarding asset allocation and risk management.

Application in the Indicator:

In the given indicator, Caterpillar SSA is applied to a financial time series (price data) to smooth the series and detect significant trends or turning points. The method is used to decompose the price data into a set number of components, which are then combined to generate a smoothed signal. This signal can help traders and investors identify potential entry and exit points for their trades.

The indicator applies the Caterpillar SSA method by first constructing the trajectory matrix using the price data, then computing the singular value decomposition (SVD) of the matrix, and finally reconstructing the time series using a selected number of components. The reconstructed series serves as a smoothed version of the original price data, highlighting significant trends and turning points. The indicator can be customized by adjusting the lag, number of computations, and number of components used in the reconstruction process. By fine-tuning these parameters, traders and investors can optimize the indicator to better match their specific trading style and risk tolerance.
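The three steps just described (trajectory matrix, SVD, and reconstruction from the leading components) can be sketched with NumPy. `lag` and `ncomp` mirror the Lag and Number of Computations inputs, and the diagonal-averaging step is the standard SSA reconstruction. The indicator's period normalization and bar-count options are not modeled. An "endpointed" reading would recompute this on each bar and keep only the last reconstructed value; that interpretation is an assumption.

```python
import numpy as np


def ssa_reconstruct(series, lag, ncomp):
    """Basic SSA: embed, decompose with SVD, keep `ncomp` components, diagonal-average."""
    x = np.asarray(series, dtype=float)
    n = x.size
    if not 1 < lag < n:
        raise ValueError("lag must satisfy 1 < lag < len(series)")
    k = n - lag + 1
    # Trajectory (Hankel) matrix: column j holds x[j : j + lag]
    traj = np.column_stack([x[j:j + lag] for j in range(k)])  # shape (lag, k)
    u, s, vt = np.linalg.svd(traj, full_matrices=False)
    r = min(ncomp, s.size)
    approx = (u[:, :r] * s[:r]) @ vt[:r, :]  # rank-r approximation
    # Diagonal averaging: entry (i, j) belongs to time index i + j
    recon = np.zeros(n)
    counts = np.zeros(n)
    for i in range(lag):
        for j in range(k):
            recon[i + j] += approx[i, j]
            counts[i + j] += 1
    return recon / counts
```

Keeping every component reproduces the input exactly. Keeping only the first one or two leaves the dominant trend-like structure, which is the smoothed line the indicator plots.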

Caterpillar SSA is versatile and can be applied to various types of financial instruments, such as stocks, bonds, commodities, and currencies. It can also be combined with other technical analysis tools or indicators to create a comprehensive trading system. For example, a trader might use Caterpillar SSA to identify the primary trend in a market and then employ additional indicators, such as moving averages or RSI, to confirm the trend and generate trading signals.

In summary, Caterpillar SSA is a powerful time series analysis technique that has found applications in AI and machine learning, as well as financial markets and economics. By decomposing a time series into interpretable components, Caterpillar SSA enables better understanding of the underlying structure of the data, facilitating forecasting, smoothing, and anomaly detection. In the context of financial trading, the technique is used to analyze price data, detect significant trends or turning points, and inform trading decisions.

█ Input Parameters

This indicator takes several inputs that affect its signal output. These inputs can be classified into three categories: Basic Settings, UI Options, and Computation Parameters.

Source: This input represents the source of price data, which is typically the closing price of an asset. The user can select other price data, such as opening price, high price, or low price. The selected price data is then utilized in the Caterpillar SSA calculation process.

Lag: The lag input determines the window size used for the time series decomposition. A higher lag value implies that the SSA algorithm will consider a longer range of historical data when extracting the underlying trend and components. This parameter is crucial, as it directly impacts the resulting smoothed series and the quality of extracted components.

Number of Computations: This input, denoted as 'ncomp,' specifies the number of eigencomponents to be considered in the reconstruction of the time series. A smaller value results in a smoother output signal, while a higher value retains more details in the series, potentially capturing short-term fluctuations.

SSA Period Normalization: This input is used to normalize the SSA period, which adjusts the significance of each eigencomponent to the overall signal. It helps in making the algorithm adaptive to different timeframes and market conditions.

Number of Bars: This input specifies the number of bars to be processed by the algorithm. It controls the range of data used for calculations and directly affects the computation time and the output signal.

Number of Bars to Render: This input sets the number of bars to be plotted on the chart. A higher value slows down the computation but provides a more comprehensive view of the indicator's performance over a longer period. This value controls how far back the indicator is rendered.

Color bars: This boolean input determines whether the bars should be colored according to the signal's direction. If set to true, the bars are colored using the defined colors, which visually indicate the trend direction.

Show signals: This boolean input controls the display of buy and sell signals on the chart. If set to true, the indicator plots shapes (triangles) to represent long and short trade signals.

Static Computation Parameters:

The indicator also includes several internal parameters that affect the Caterpillar SSA algorithm, such as Maxncomp, MaxLag, and MaxArrayLength. These parameters set the maximum allowed values for the number of computations, the lag, and the array length, ensuring that the calculations remain within reasonable limits and do not consume excessive computational resources.

█ A Note on Endpointed, Non-repainting Indicators

An endpointed indicator is one that does not recalculate or repaint its past values based on new incoming data. In other words, the indicator's previous signals remain the same even as new price data is added. This is an important feature because it ensures that the signals shown on historical bars are the same ones the indicator would have produced in real time, so they can still be trusted after the fact.

When an indicator is non-repainting or endpointed, it means that the trader can have confidence in the signals being generated, knowing that they will not change as new data comes in. This allows traders to make informed decisions based on historical signals, without the fear of the signals being invalidated in the future.

In the case of the Endpointed SSA of Price, this non-repainting property is particularly valuable because the trend changes and reversals it flags on past bars reflect only what was knowable at the time, which makes them a sound basis for trading decisions. This can be especially important in volatile markets where quick decisions need to be made.
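The non-repainting idea can be illustrated with a short, hypothetical Python sketch (NumPy assumed). At each bar it runs SSA only on the most recent nbars prices ending at that bar and keeps the last reconstructed value, so later data can never alter an earlier output. The names ssa_endpoint and endpointed_ssa, and mapping nbars to the Number of Bars input, are assumptions for illustration, not the indicator's actual implementation.

```python
import numpy as np

def ssa_endpoint(window, lag, ncomp):
    """Hypothetical helper: last value of a rank-ncomp SSA reconstruction of window."""
    w = np.asarray(window, dtype=float)
    k = len(w) - lag + 1
    # Trajectory matrix: lag rows, k columns; column j holds w[j:j + lag]
    traj = np.column_stack([w[j:j + lag] for j in range(k)])
    u, s, vt = np.linalg.svd(traj, full_matrices=False)
    r = min(ncomp, len(s))
    # The final sample occupies a single cell (last row, last column) of the
    # trajectory matrix, so its diagonal average is just that cell
    return float((u[-1, :r] * s[:r]) @ vt[:r, -1])

def endpointed_ssa(prices, lag, ncomp, nbars):
    """Hypothetical: output at bar t reads only prices[t - nbars + 1 : t + 1]."""
    if not 1 < lag < nbars:
        raise ValueError("need 1 < lag < nbars")
    out = [None] * len(prices)  # not enough history before bar nbars - 1
    for t in range(nbars - 1, len(prices)):
        out[t] = ssa_endpoint(prices[t - nbars + 1:t + 1], lag, ncomp)
    return out
```

Appending new bars only adds outputs at the end; values already computed for earlier bars stay untouched. The trade-off is that an endpoint value can be noisier than a value in the middle of a reconstruction, because it comes from one cell of the trajectory matrix rather than an average over several.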

Stophunt Wick

Acknowledgement

This indicator is dedicated to my friend Alexandru, who saved me when one of these liquidation raids almost wiped out my position.

Alexandru is one of the best scalpers out there, and he always nails his entries at the tip of these wicks.

This inspired me to create this indicator.

What's a Liquidation Wick?

It is the fast stop-hunting wick that sweeps the market, triggering traders' stop-losses and liquidations.

Liquidity is the lifeblood of the stock market, and liquidation is the process that moves price.

This indicator identifies when a liquidity pool is being raided to trigger buy or sell stops; these raids are also known as stop-hunts.

How does it work?

When the market consolidates in one direction, it builds up liquidity zones.

Market makers break out of these consolidation phases with dramatic price action, either pumping or dumping to raid these liquidity zones.

These moves are also called stop-hunts or liquidity raids. Afterwards, price starts reversing in the opposite direction.

A raid is most noticeable from the length of a candle's wick, formed in a very short amount of time, and from the total size of the candle.

This indicator highlights them accordingly.

Settings

The Wick Ratio and Candle Ratio settings work with their default values, but fine-tuning them makes the indicator more useful.

Wick Ratio: The size of the wick compared to the body of the candle.

Adjust this to a higher ratio on smaller timeframes or a lower ratio on bigger timeframes, depending on your trading style, to spot trend reversals.

Candle Ratio: The minimum size of the candle as a percentage of the current price; by default it is 0.75%.

For example, if BTC is at 20,000, the candle has to be at least 150 in size (0.75% of 20,000).

This can be fine-tuned to a bigger candle size on higher timeframes or a smaller one on shorter timeframes, depending on the trade type.
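The two settings act as two filters on each candle. The Python sketch below is one possible interpretation, not the script's actual logic: candle size is taken as high minus low, the current price is taken as the close, and each wick is compared with the body. The default wick_ratio of 2.0 is a placeholder because the description does not state the real default; the Candle type and the liquidation_wick function are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Candle:
    open: float
    high: float
    low: float
    close: float

def liquidation_wick(c, wick_ratio=2.0, candle_ratio_pct=0.75):
    """Hypothetical filter: return 'upper', 'lower' or None (wick_ratio default assumed)."""
    size = c.high - c.low
    # Candle Ratio: the candle must span at least candle_ratio_pct % of the close
    if size < c.close * candle_ratio_pct / 100.0:
        return None
    upper = c.high - max(c.open, c.close)  # wick above the body
    lower = min(c.open, c.close) - c.low   # wick below the body
    # Guard against doji candles whose body is (almost) zero
    body = max(abs(c.close - c.open), 1e-12)
    # Wick Ratio: the longer wick must be at least wick_ratio times the body
    if upper >= lower and upper / body >= wick_ratio:
        return "upper"
    if lower / body >= wick_ratio:
        return "lower"
    return None
```

With BTC closing at 20,000 and the default Candle Ratio, the size threshold works out to 20,000 × 0.75 / 100 = 150, matching the example above.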

How to use it?

This indicator identifies when a liquidity pool is being raided to trigger buy or sell stops, also known as stop-hunts. It can be used on its own for scalping, but several other indicators can also help build confluence for trades on bigger timeframes.

Scalp

This indicator shows the most chaotic moments in price action; therefore, it works best on smaller timeframes, ideally 3- or 5-minute candles.

- Wait for the market to start pumping or dumping.

- Current candle will change colour (Bullish/Bearish).

- Enter trade as soon as price starts to reverse back.

- Place the stop-loss outside of the current candle.

- Wait for the Liquidation Wick to appear as confirmation.

Price is very chaotic during a liquidity stop-hunt raid, but there is a saying:

"In the midst of chaos, there is also opportunity" - Sun-Tzu

Since this is a very high-risk, high-reward strategy, it is advisable to practice with paper trading first.

Practice until you have perfected it, and this indicator can become your bread-and-butter scalp confirmation.

Fair Value Gap

The FVG (fair value gap) strategy is the most accurate one to use in conjunction with this indicator.

Price normally reverses after filling a fair value gap, but it is often difficult to know when and where.

This indicator helps identify those crucial entry points where price action reverses course.
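A fair value gap is commonly defined as a three-candle pattern: the first candle's high stays below the third candle's low (bullish gap), or the first candle's low stays above the third candle's high (bearish gap). The Python sketch below applies that common definition. The function names are hypothetical, and treating a gap as "consumed" once a later candle's range spans it completely is an assumption, since the description does not define it.

```python
def find_fvgs(highs, lows):
    """Hypothetical: list (index, kind, gap_low, gap_high) for each three-candle gap."""
    gaps = []
    for i in range(2, len(highs)):
        if highs[i - 2] < lows[i]:
            # Bullish gap between candle i-2's high and candle i's low
            gaps.append((i, "bullish", highs[i - 2], lows[i]))
        elif lows[i - 2] > highs[i]:
            # Bearish gap between candle i's high and candle i-2's low
            gaps.append((i, "bearish", highs[i], lows[i - 2]))
    return gaps

def is_consumed(gap, low, high):
    """Assumption: a later candle consumes the gap if its range covers all of it."""
    _, _, gap_low, gap_high = gap
    return low <= gap_low and high >= gap_high
```

A liquidation wick appearing on the candle that consumes a gap would then be the kind of reversal entry the paragraph above describes.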

Support and Resistance

This indicator can also be used in conjunction with support and resistance lines.

Generally, the stop-hunt drives price deep below support or spikes it well above resistance to liquidate positions.

Bollinger Bands

The Bollinger Bands strategy is to wait until the price breaks out of the band.

Once the liquidation wick forms, that would be an ideal entry point.
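One way to express this rule in code is sketched below; it is not the script's actual logic. It computes Bollinger Bands from recent closes, then flags a candle whose wick pierces a band while its close finishes back inside. The 20-bar length, the 2.0 multiplier and the use of the population standard deviation are common defaults assumed here, and both function names are hypothetical.

```python
import statistics

def bollinger(closes, length=20, mult=2.0):
    """Hypothetical helper: (lower, basis, upper) from the last `length` closes."""
    window = closes[-length:]
    basis = statistics.fmean(window)
    dev = statistics.pstdev(window)  # population standard deviation (assumed)
    return basis - mult * dev, basis, basis + mult * dev

def band_wick(high, low, close, lower, upper):
    """Hypothetical: 'lower'/'upper' if the wick broke a band but the close came back."""
    if low < lower and close > lower:
        return "lower"  # wick below the lower band, close back inside
    if high > upper and close < upper:
        return "upper"  # wick above the upper band, close back inside
    return None
```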

Script change

This is an open-source script; feel free to modify it according to your needs and to amplify your existing strategy.

Bar Move Probability Price Levels (BMPPL)

Hello fellow traders! I am thrilled to present my latest creation, the Bar Move Probability Price Levels (BMPPL) indicator. This powerful tool offers a statistical edge in your trading by helping you understand the likelihood of price movements at multiple levels based on historical data. In this post, I'll provide an overview of the indicator, its features, and how it can enhance your trading experience. Let's dive in!

What is the Bar Move Probability Price Levels Indicator?

The Bar Move Probability Price Levels (BMPPL) indicator is a versatile tool that calculates the probability of a bar's price movement at multiple levels, either up or down, based on past occurrences of similar price movements. This comprehensive approach can provide valuable insights into the potential direction of the market, allowing you to make better-informed trading decisions.

One of the standout features of the BMPPL indicator is its flexibility. You can choose to see the probabilities of reaching various price levels, or you can focus on the highest probability move by adjusting the "Max Number of Elements" and "Step Size" settings. This flexibility ensures that the indicator caters to your specific trading style and requirements.

Max Number of Elements and Step Size: Fine-Tuning Your BMPPL Indicator

The BMPPL indicator allows you to customize its output to suit your trading style and requirements through two key settings: Max Number of Elements and Step Size.

Max Number of Elements: This setting determines the maximum number of price levels displayed by the indicator. By default, it is set to 1000, meaning the indicator will show probabilities for up to 1000 price levels. You can adjust this setting to limit the number of price levels displayed, depending on your preference and trading strategy.

Step Size: The Step Size setting determines the increment between displayed price levels. By default, it is set to 100, which means the indicator will display probabilities for every 100th price level. Adjusting the Step Size allows you to control the granularity of the displayed probabilities, enabling you to focus on specific price movements.

By adjusting the Max Number of Elements and Step Size settings, you can fine-tune the BMPPL indicator to focus on the most relevant price levels for your trading strategy. For example, if you want to concentrate on the highest probability move, you can set the Max Number of Elements to 1 and the Step Size to 1. This will cause the indicator to display only the price level with the highest probability, simplifying your trading decisions.
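The description does not spell out exactly how the two settings combine, so the Python sketch below shows one plausible reading: rank the recorded moves by probability, keep every step_size-th entry, and stop after max_elements entries. Under this reading, setting both values to 1 leaves only the single most probable move. The function name select_levels and its input format (a dictionary mapping each percentage move to its probability) are assumptions.

```python
def select_levels(probs, max_elements=1000, step_size=100):
    """Hypothetical reading of the two settings; not the indicator's actual code."""
    if max_elements < 1 or step_size < 1:
        raise ValueError("both settings must be at least 1")
    # Highest probability first; ties keep their original order (sorted is stable)
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    # Every step_size-th level, capped at max_elements levels
    return ranked[::step_size][:max_elements]
```

For example, with the moves {0.5: 0.1, 1.0: 0.6, 2.0: 0.3}, select_levels(probs, 1, 1) keeps only the 1.0% move.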

Probability Calculation: Understanding the Core Concept

The BMPPL indicator calculates the probability of a bar's price movement by analyzing historical price changes and comparing them to the current price change (in percentage). The indicator maintains separate arrays for green (bullish) and red (bearish) price movements and their corresponding counts.

When a new bar is formed, the indicator checks whether the price movement (in percentage) is already present in the respective array. If it is, the corresponding count is updated. Otherwise, a new entry is added to the array, with an initial count of 1.

Once the historical data has been analyzed, the BMPPL indicator calculates the probability of each price movement by dividing the count of each movement by the sum of all counts. These probabilities are then stored in separate arrays for green and red movements.
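The counting and probability steps above can be sketched in Python as follows. The sketch keeps parallel lists of moves and counts for green and red bars, mirroring the arrays the description mentions. Measuring each move as the open-to-close change in percent, rounding it to two decimals so that similar moves share an entry, and counting a bar whose close equals its open as green are all assumptions; the name move_probabilities is hypothetical.

```python
def move_probabilities(opens, closes, decimals=2):
    """Hypothetical: ({move%: prob} for green bars, {move%: prob} for red bars)."""
    green_moves, green_counts = [], []
    red_moves, red_counts = [], []
    for o, c in zip(opens, closes):
        # Size of the bar's move in percent, rounded so near-identical moves match
        move = round(abs(c - o) / o * 100.0, decimals)
        # Bars with close == open are counted as green here (an assumption)
        moves, counts = (green_moves, green_counts) if c >= o else (red_moves, red_counts)
        if move in moves:
            counts[moves.index(move)] += 1  # seen before: update its count
        else:
            moves.append(move)  # new move: add it with an initial count of 1
            counts.append(1)

    def to_probs(moves, counts):
        # Probability of each move = its count divided by the sum of all counts
        total = sum(counts)
        return {m: n / total for m, n in zip(moves, counts)}

    return to_probs(green_moves, green_counts), to_probs(red_moves, red_counts)
```

For instance, three green bars of +1%, +1% and +2% give the green probabilities {1.0: 2/3, 2.0: 1/3}.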

Utilizing BMPPL Indicator Settings Effectively

To make the most of the BMPPL indicator, it's essential to understand how to use the Max Number of Elements and Step Size settings effectively:

Identify your trading objectives: Before adjusting the settings, it's crucial to know what you want to achieve with your trades. Are you targeting specific price levels or focusing on high-probability moves? Identifying your objectives will help you determine the appropriate settings.

Start with the default settings: The default settings provide a broad overview of price movement probabilities. Start by analyzing these settings to gain a general understanding of the market behavior.

Adjust the settings according to your objectives: Once you have a clear understanding of your trading objectives, adjust the Max Number of Elements and Step Size settings accordingly. For example, if you want to focus on the highest probability move, set both settings to 1.

Experiment and refine: As you gain experience with the BMPPL indicator, continue to experiment with different combinations of Max Number of Elements and Step Size settings. This will help you find the optimal configuration that aligns with your trading strategy and risk tolerance. Remember to continually evaluate your trading results and refine your settings as needed.

Combine with other technical analysis tools: While the BMPPL indicator provides valuable insights on its own, combining it with other technical analysis tools can further enhance your trading strategy. Use additional indicators and chart patterns to confirm your analysis and improve the accuracy of your trades.

Monitor and adjust: Market conditions are constantly changing, and it's crucial to stay adaptive. Keep monitoring the market and adjust your BMPPL settings as necessary to ensure they remain relevant and effective in the current market environment.